Setting up a Content Crawler Job to Retrieve Sitemaps

The following guide describes how to set up a crawler job that retrieves the content of a basic Sitemap whose source includes RDFa.
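A sitemap page is essentially a list of links, and the pages it points to may carry RDFa attributes. The Python sketch below shows both ideas for a single HTML page, using only the standard library. It does not describe how Virtuoso's crawler or Sponger work internally. The `SitemapPageScanner` class and `scan` function are hypothetical names, and the chosen attribute subset is an assumption.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# The five RDFa Lite 1.1 attributes plus "about"; rel/href are skipped
# because ordinary HTML uses them without any RDFa meaning.
RDFA_ATTRS = {"vocab", "typeof", "property", "resource", "prefix", "about"}


class SitemapPageScanner(HTMLParser):
    """Collects outgoing links and counts RDFa attributes in one HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []
        self.rdfa_attr_count = 0

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in RDFA_ATTRS:
                self.rdfa_attr_count += 1
            # Resolve relative <a href="..."> values against the page URL.
            if tag == "a" and name == "href" and value:
                self.links.append(urljoin(self.base_url, value))


def scan(html: str, base_url: str) -> SitemapPageScanner:
    """Parse one page and return the scanner holding links and the RDFa count."""
    scanner = SitemapPageScanner(base_url)
    scanner.feed(html)
    scanner.close()
    return scanner
```

When run on the HTML of a sitemap page, `scan(html, url).links` roughly approximates the pages a crawler would visit from it. A non-zero `rdfa_attr_count` on those pages suggests they carry RDFa that could end up as triples.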

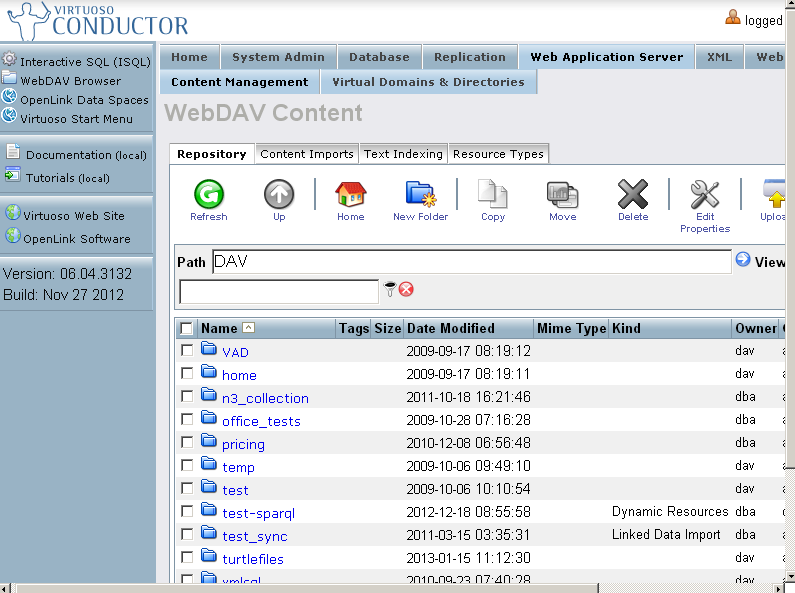

- Open the Virtuoso Conductor user interface (for example, http://cname:port/conductor) and log in as the "dba" user.

- Go to the "Web Application Server" tab.

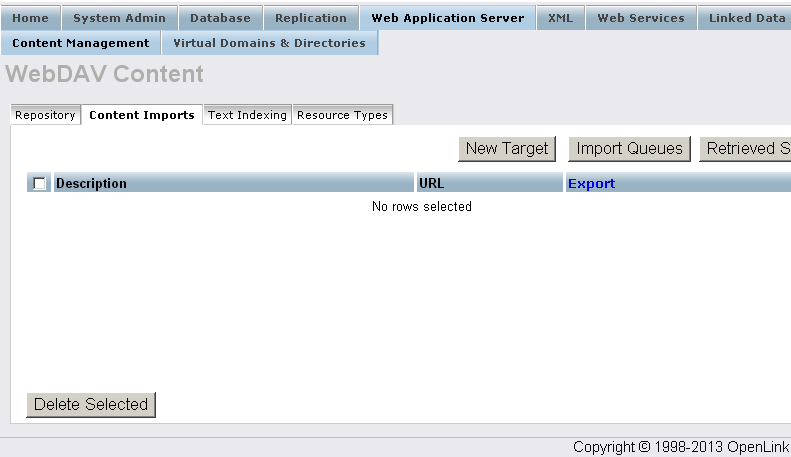

- Go to the "Content Imports" tab.

- Click on the "New Target" button.

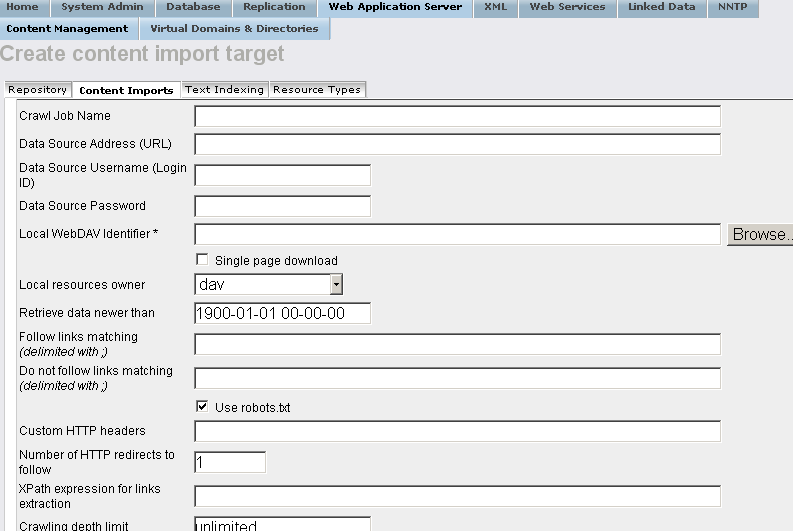

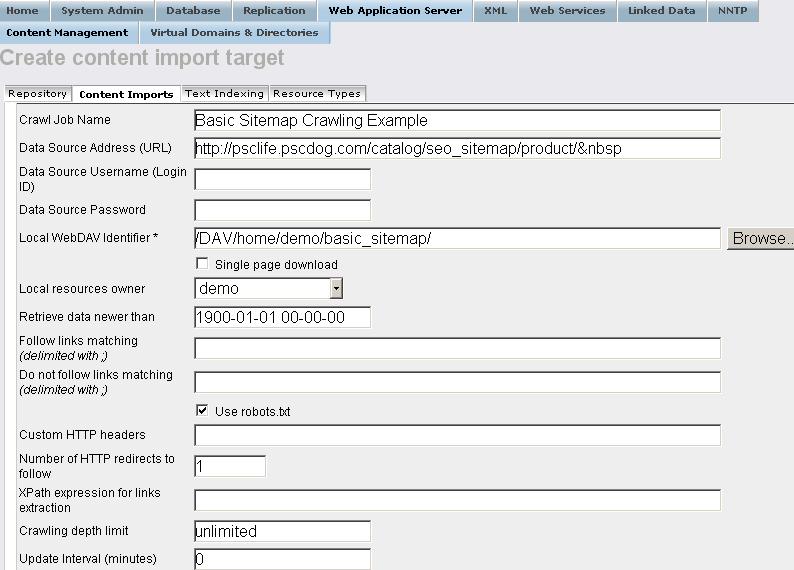

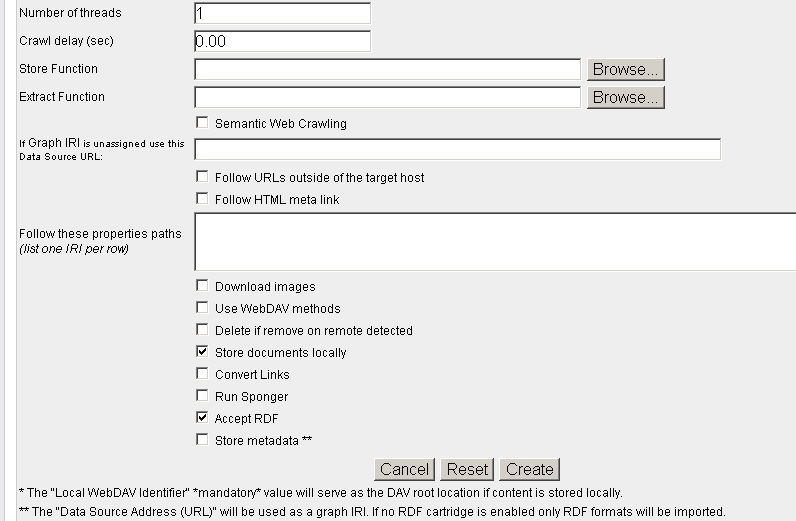

- In the form displayed:

  - Enter a name of choice in the "Crawl Job Name" text-box:

    Basic Sitemap Crawling Example

  - Enter the URL of the site to be crawled in the "Data Source Address (URL)" text-box:

    http://psclife.pscdog.com/catalog/seo_sitemap/product/

  - In the "Local WebDAV Identifier" text-box, enter the location in the Virtuoso WebDAV repository where the crawled content should be stored. For example, if the user demo is available:

    /DAV/home/demo/basic_sitemap/

  - Choose the owner of the collection from the "Local resources owner" list-box, for example, user demo.

  - Select the "Accept RDF" check-box.

- Click the "Create" button to create the import:

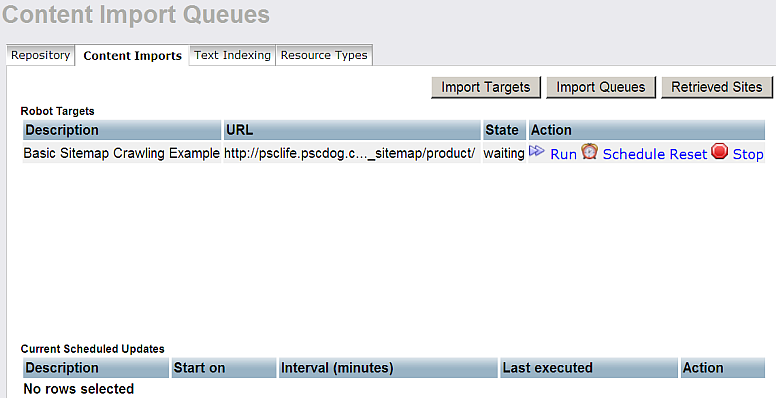
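If you keep crawl-job definitions in scripts or review notes, the form values above can be summarised as a small record. The Python sketch below is hypothetical. The `CrawlJobSettings` class, its field names, and its validation rules are illustrative assumptions and do not correspond to any Virtuoso API. The job itself is still created through Conductor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrawlJobSettings:
    """Hypothetical record of the values entered in the Conductor form."""

    name: str
    source_url: str
    dav_path: str
    owner: str
    accept_rdf: bool = True

    def problems(self) -> list[str]:
        """Return human-readable problems; an empty list means the values look sane."""
        issues = []
        if not self.name.strip():
            issues.append("Crawl Job Name is empty")
        if not self.source_url.startswith(("http://", "https://")):
            issues.append("Data Source Address should be an http(s) URL")
        # Assumption: target collections are /DAV/... paths ending in '/'.
        if not (self.dav_path.startswith("/DAV/") and self.dav_path.endswith("/")):
            issues.append("Local WebDAV Identifier should look like /DAV/.../")
        return issues


# The values used in this guide.
example = CrawlJobSettings(
    name="Basic Sitemap Crawling Example",
    source_url="http://psclife.pscdog.com/catalog/seo_sitemap/product/",
    dav_path="/DAV/home/demo/basic_sitemap/",
    owner="demo",
)
```

`example.problems()` returns an empty list for the values in this guide. A blank name, a non-HTTP URL, or a path outside `/DAV/` each add one message.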

- Click the "Import Queues" button.

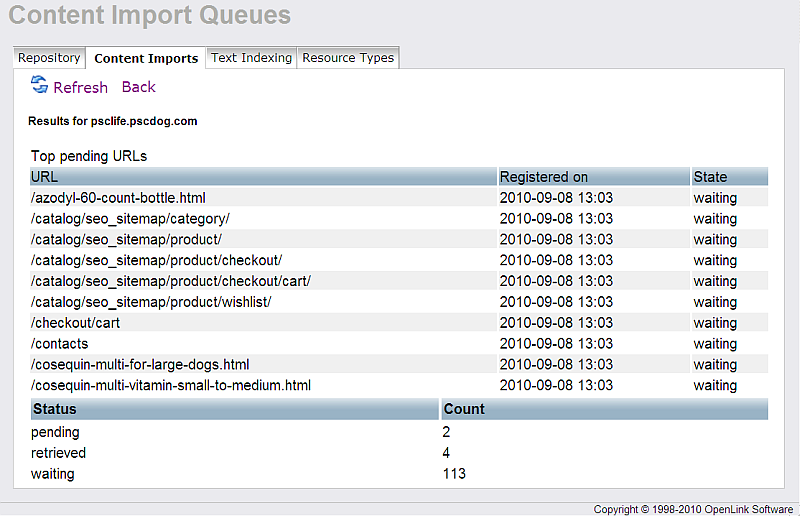

- For the "Robot targets" entry labeled "Basic Sitemap Crawling Example", click the "Run" button.

- The target site is then crawled. The retrieved pages are stored locally in DAV, and any sponged triples are stored in the RDF Quad Store.

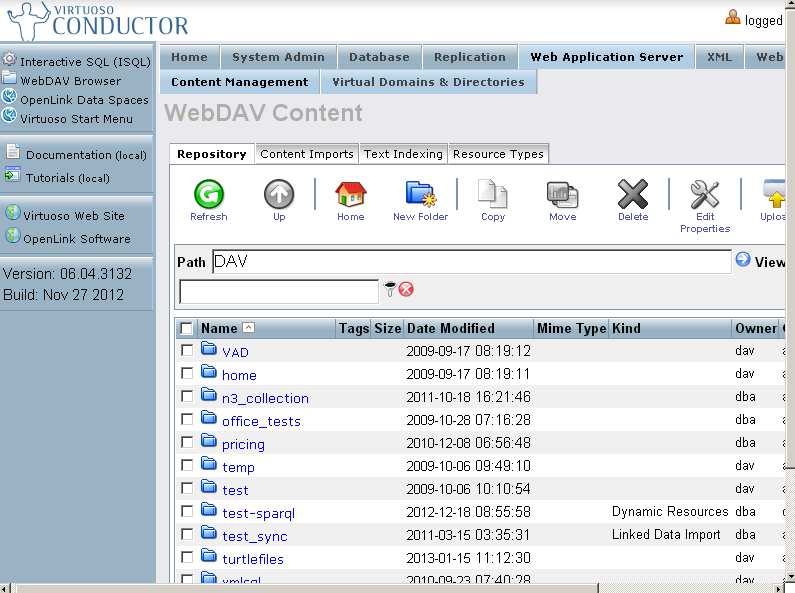

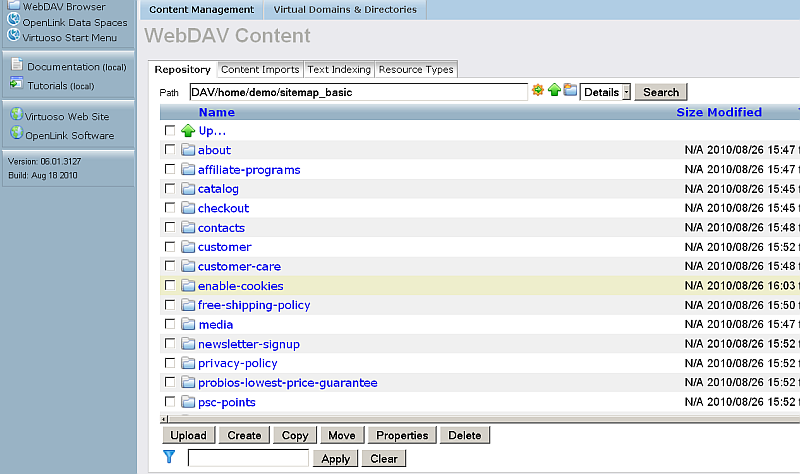
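To check whether sponged triples reached the Quad Store, you can query Virtuoso's SPARQL endpoint at http://cname:port/sparql. The Python sketch below only builds the query and the GET URL. It assumes that sponged triples are stored in named graphs whose IRIs start with the crawled site's URL. That graph-naming assumption should be verified on your server. The function names are hypothetical.

```python
from urllib.parse import urlencode


def sponged_graphs_query(site_prefix: str) -> str:
    """SPARQL that counts triples per named graph whose IRI starts with site_prefix."""
    # Refuse characters that would break out of the SPARQL string literal.
    if '"' in site_prefix or "\\" in site_prefix:
        raise ValueError("site_prefix must not contain quotes or backslashes")
    # Assumption: sponged triples are stored in graphs named after the crawled URLs.
    return (
        "SELECT ?g (COUNT(*) AS ?triples) WHERE { "
        "GRAPH ?g { ?s ?p ?o } "
        f'FILTER(STRSTARTS(STR(?g), "{site_prefix}")) '
        "} GROUP BY ?g ORDER BY DESC(?triples)"
    )


def endpoint_url(server: str, query: str) -> str:
    """Build a SPARQL 1.1 Protocol GET URL against the server's /sparql endpoint."""
    params = urlencode({"query": query})
    return f"{server.rstrip('/')}/sparql?{params}"
```

For example, `endpoint_url("http://localhost:8890", sponged_graphs_query("http://psclife.pscdog.com/"))` targets a local server on Virtuoso's usual default port 8890. Adjust the address to match your own cname:port. To get JSON results, fetch the URL with an `Accept: application/sparql-results+json` header.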

- Go to the "Web Application Server" -> "Content Management" tab.

- Navigate to the location of the newly created DAV collection:

/DAV/home/demo/basic_sitemap/

- The retrieved content will be available in this location.
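Virtuoso also serves `/DAV` over HTTP, so the same collection can be listed with a standard WebDAV `PROPFIND` request instead of the Conductor pages. The Python sketch below uses only the standard library. It assumes the collection is reachable at http://cname:port/DAV/home/demo/basic_sitemap/. The helper names are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET

DAV_NS = "{DAV:}"  # ElementTree's spelling of the "DAV:" XML namespace


def propfind_request(collection_url: str) -> urllib.request.Request:
    """Build a Depth: 1 PROPFIND request that lists a WebDAV collection's members."""
    body = (
        b'<?xml version="1.0" encoding="utf-8"?>'
        b'<D:propfind xmlns:D="DAV:"><D:prop><D:resourcetype/>'
        b"<D:getcontentlength/></D:prop></D:propfind>"
    )
    return urllib.request.Request(
        collection_url,
        data=body,
        method="PROPFIND",
        headers={"Depth": "1", "Content-Type": "application/xml; charset=utf-8"},
    )


def member_hrefs(multistatus_xml: bytes) -> list[str]:
    """Extract every <D:href> from a 207 Multi-Status response body."""
    root = ET.fromstring(multistatus_xml)
    return [el.text for el in root.iter(f"{DAV_NS}href") if el.text]
```

Pass the request to `urllib.request.urlopen` and feed the response body to `member_hrefs`. The first href is normally the collection itself. If the collection is not public, install an opener with `HTTPBasicAuthHandler` or `HTTPDigestAuthHandler`, depending on your server's configuration, and supply the owner's credentials.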

Related

- Setting up Crawler Jobs Guide using Conductor

- Setting up a Content Crawler Job to Add RDF Data to the Quad Store

- Setting up a Content Crawler Job to Retrieve Semantic Sitemaps (a variation of the standard sitemap)

- Setting up a Content Crawler Job to Retrieve Content from Specific Directories

- Setting up a Content Crawler Job to Retrieve Content from SPARQL endpoint